John J. Hopfield of Princeton University and Geoffrey E. Hinton of the University of Toronto won the 2024 Nobel Prize in Physics for using tools from physics to power modern-day machine learning. The press release from the Nobel committee notes that:

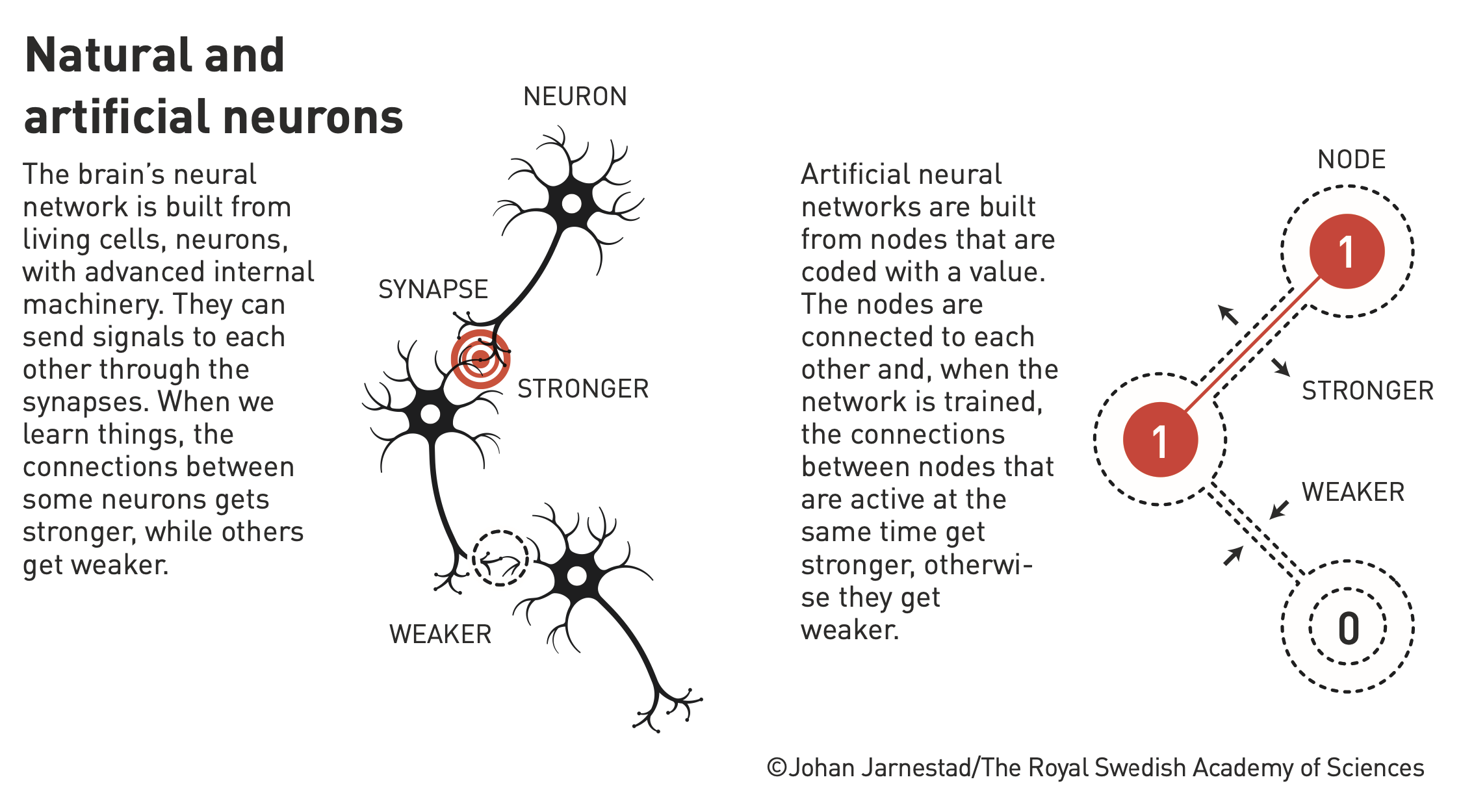

John Hopfield created an associative memory that can store and reconstruct images and other types of patterns in data. Geoffrey Hinton invented a method that can autonomously find properties in data, and so perform tasks such as identifying specific elements in pictures.

Press release from the Royal Swedish Academy of Sciences

Geoffrey Hinton, along with Yann LeCun and Yoshua Bengio, had previously won the 2018 Turing Award for their work on deep neural networks, including back-propagation. Despite its use in fields outside of physics, John Hopfield's work has long been categorised primarily under the statistical physics of disordered systems, which the arXiv lists under condensed-matter physics. The neural-network models that dominate modern-day AI and ML are, however, neither Hopfield networks nor Boltzmann Machines.

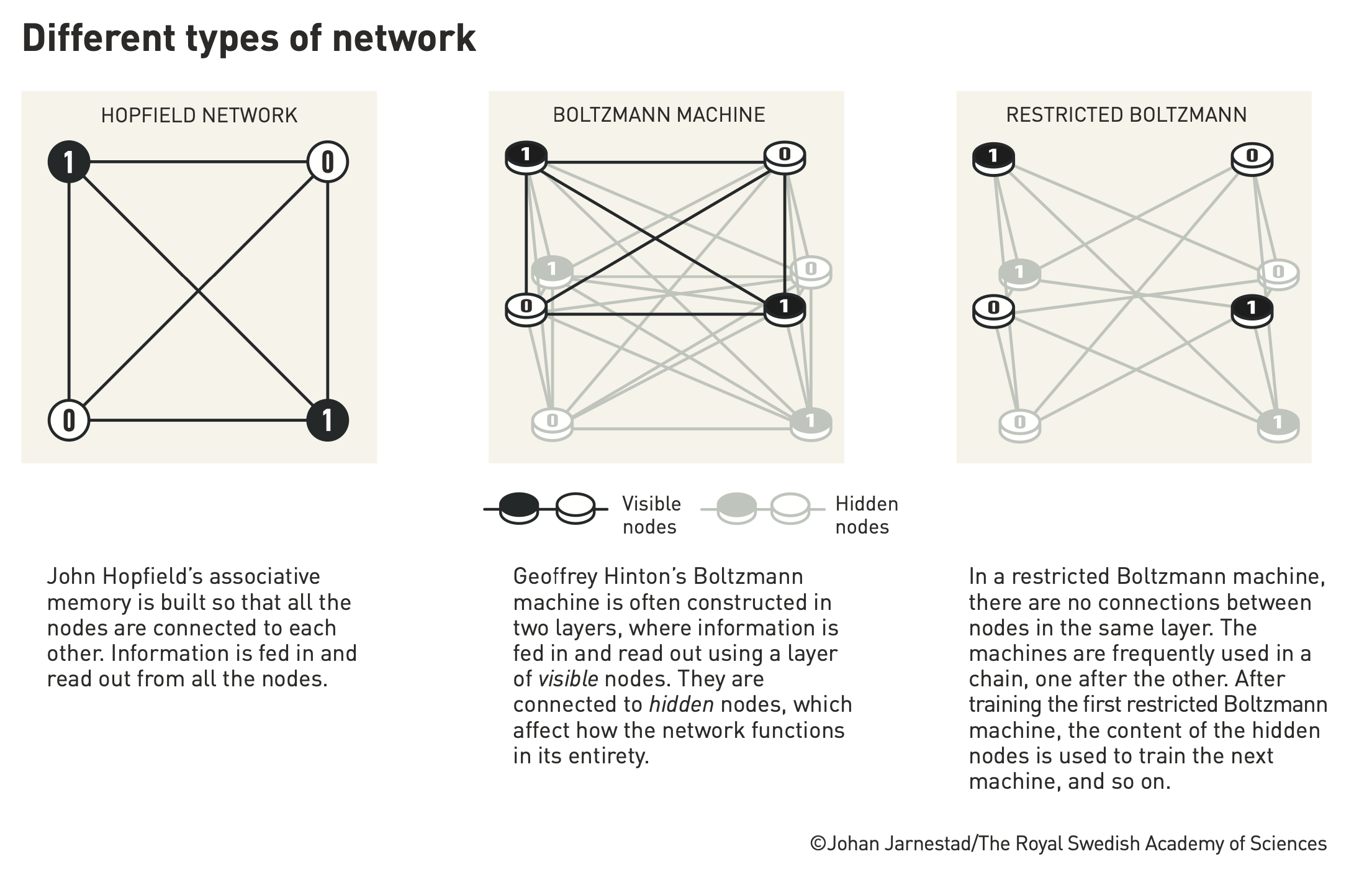

The Hopfield network borrows the spin-based description of lattices from physics: pieces of information are stored as low-energy states of a set of interconnected nodes. Any input fed to such a network, even a partial or distorted one, drives the network step by step towards the nearest pre-configured low-energy state, so the network serves as a memory unit.
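The recall behaviour described above can be illustrated with a minimal sketch. It assumes the common textbook formulation (nodes taking values +1 or -1, a Hebbian learning rule, and one-node-at-a-time updates); the function names `train`, `energy` and `recall` and the two toy patterns are illustrative choices, not taken from the press release.

```python
# Minimal Hopfield-network sketch (textbook formulation; all names are illustrative).

def train(patterns):
    """Hebbian rule: w[i][j] is the average of p[i] * p[j] over patterns, zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / len(patterns)
    return w

def energy(w, state):
    """E = -1/2 * sum over i, j of w[i][j] * s[i] * s[j]."""
    n = len(state)
    return -0.5 * sum(w[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))

def recall(w, state, max_sweeps=10):
    """Update one node at a time to the sign of its local field until nothing changes."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            field = sum(w[i][j] * s[j] for j in range(len(s)))
            new_value = 1 if field >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:
            break
    return s

# Two toy patterns of +1/-1 values stored as memories.
stored = [
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
]
weights = train(stored)

# The first pattern with its last node flipped, i.e. a corrupted input.
noisy = [1, -1, 1, -1, 1, -1, 1, 1]
recovered = recall(weights, noisy)

print(recovered == stored[0])                             # the stored pattern is restored
print(energy(weights, noisy), energy(weights, recovered))  # energy drops during recall
```

Each update can only lower the energy or leave it unchanged, which is why the network settles into a stored pattern rather than wandering indefinitely.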

Hinton’s development of the Hopfield network led to Boltzmann Machines, which use statistics to compute the likeliest output of the entire crystal (each lattice point acts as a ‘node’, and the crystal as a whole is the neural network) by similarly computing the likeliest output from each node. This means a Boltzmann Machine can recognise familiar features in entirely new data sets based on the data sets on which it has been trained. This makes it possible, for example, to use machines to judge what music or films people might like based on their previous choices, which is also a typical example of where this work finds use today.
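For readers who want the statistics spelled out, the standard textbook form of a Boltzmann Machine is roughly as follows. The notation is conventional and assumed here rather than taken from the press release: $s_i \in \{0, 1\}$ is the state of node $i$, $w_{ij}$ are symmetric connection weights, $b_i$ are biases and $T$ is a temperature parameter.

```latex
% Energy of a full configuration s, and the probability the network assigns to it
\[
E(\mathbf{s}) = -\sum_{i<j} w_{ij}\, s_i s_j - \sum_i b_i s_i,
\qquad
P(\mathbf{s}) = \frac{e^{-E(\mathbf{s})/T}}{\sum_{\mathbf{s}'} e^{-E(\mathbf{s}')/T}}
\]

% Probability that a single node switches on, given the states of all other nodes
\[
P(s_i = 1 \mid \text{all other nodes})
  = \frac{1}{1 + \exp\!\left(-\dfrac{b_i + \sum_{j \neq i} w_{ij} s_j}{T}\right)}
\]
```

Low-energy configurations are therefore the most probable ones, and each node's behaviour depends only on the nodes it is connected to.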

Further, the probabilistic sampling method known as Gibbs sampling, widely used in Bayesian statistics, can then be used to draw representative samples from such a network rather than waiting for a computation over every possible configuration of the network, achieving comparably reliable results far more efficiently.
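A small sketch can make the efficiency argument concrete. It assumes a toy three-node Boltzmann Machine with binary (0 or 1) nodes and hand-picked weights and biases; every name and number below is hypothetical and chosen only for illustration. The brute-force function enumerates all configurations, which doubles in cost with every added node, while the Gibbs sampler only ever updates one node at a time.

```python
import itertools
import math
import random
from collections import Counter

def energy(state, weights, biases):
    """E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i for binary states s_i in {0, 1}."""
    n = len(state)
    pair = sum(weights[i][j] * state[i] * state[j]
               for i in range(n) for j in range(i + 1, n))
    return -pair - sum(b * s for b, s in zip(biases, state))

def exact_distribution(weights, biases, temperature=1.0):
    """Brute force over all 2^n configurations; only feasible for tiny networks."""
    states = list(itertools.product([0, 1], repeat=len(biases)))
    unnorm = [math.exp(-energy(s, weights, biases) / temperature) for s in states]
    z = sum(unnorm)
    return {s: u / z for s, u in zip(states, unnorm)}

def gibbs_sample(weights, biases, n_sweeps, burn_in=100, temperature=1.0, seed=0):
    """Resample each node in turn from its conditional probability given the others."""
    rng = random.Random(seed)
    n = len(biases)
    state = [rng.randint(0, 1) for _ in range(n)]
    samples = []
    for sweep in range(burn_in + n_sweeps):
        for i in range(n):
            # Energy gap between node i being off and on, given the other nodes.
            gap = biases[i] + sum(weights[i][j] * state[j] for j in range(n) if j != i)
            p_on = 1.0 / (1.0 + math.exp(-gap / temperature))
            state[i] = 1 if rng.random() < p_on else 0
        if sweep >= burn_in:  # discard early sweeps taken before the chain settles
            samples.append(tuple(state))
    return samples

# Hypothetical symmetric weights and biases for a three-node network.
weights = [[0.0, 1.0, -1.0],
           [1.0, 0.0, 0.5],
           [-1.0, 0.5, 0.0]]
biases = [0.2, -0.3, 0.1]

exact = exact_distribution(weights, biases)
samples = gibbs_sample(weights, biases, n_sweeps=20000)
counts = Counter(samples)
empirical = {s: counts[s] / len(samples) for s in exact}

for s in exact:
    print(s, round(exact[s], 3), round(empirical[s], 3))
```

For three nodes the exact calculation is trivial, but it gives a reference against which the sampled frequencies can be compared; for networks with thousands of nodes only the sampling route remains practical.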

Although the Nobel committee pointed out during the announcement that the use cases for these works are “as diverse as particle physics, materials science and astrophysics”, the work is currently employed mostly in more complex, altered forms in computer science. Hopfield networks and Boltzmann Machines themselves remain largely restricted to academia, with corporate development of ML preferring methods such as those for which Yann LeCun et al. won the Turing Award.

Notably, Hinton, a former VP and Engineering Fellow at Google, quit the company in 2023, voicing concerns about the potential misuse of AI chatbots.